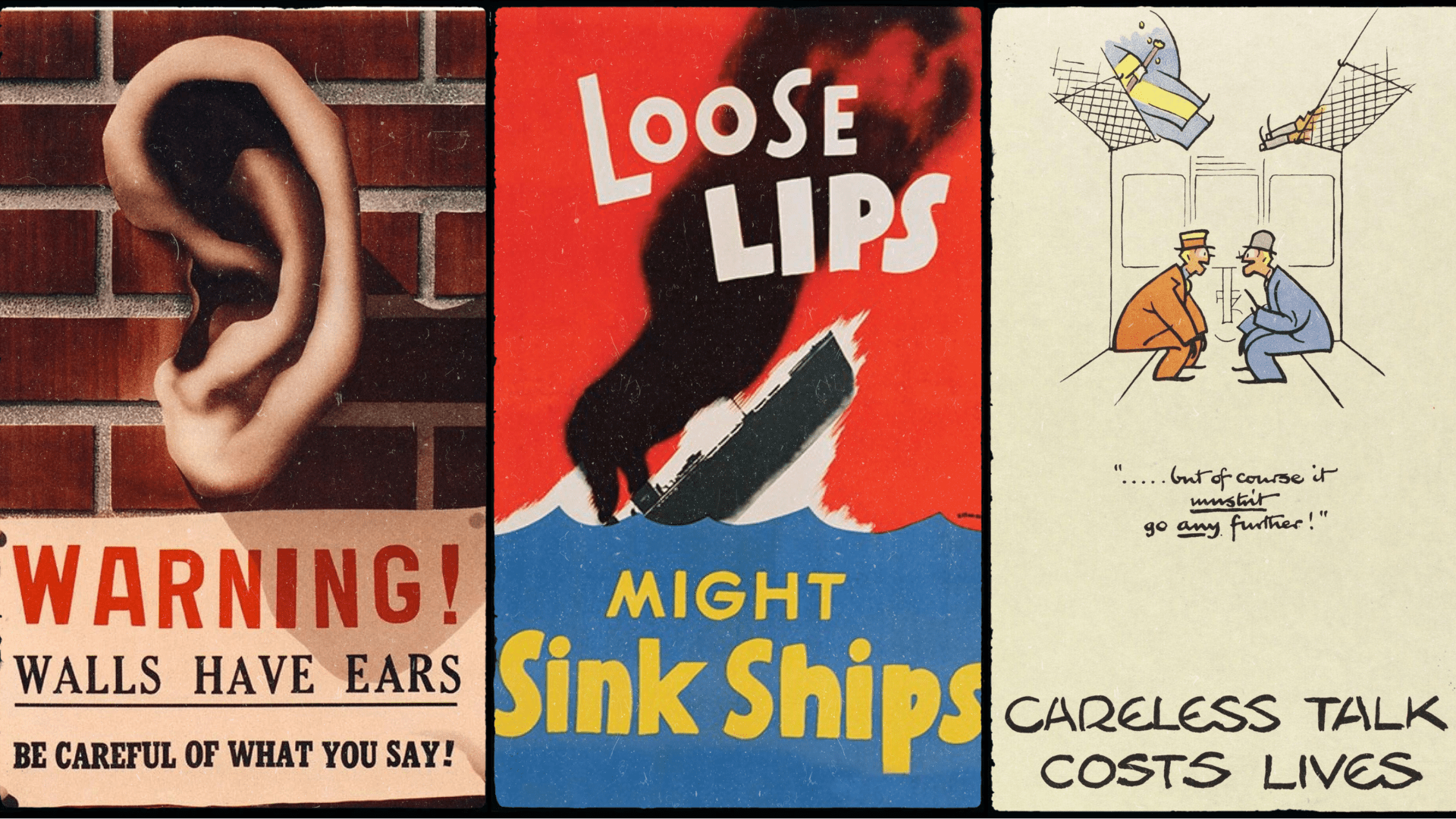

In a recent viral clip, Dr Richard Susskind warns us to be careful about what we tell public AI, because it is not always a safe place to share information. Artificial intelligence has become part of daily life. Tools like ChatGPT and other public AI systems can write emails, answer questions, and explain complex topics in simple words. Many people now see them as helpers that are always available. But are they safe?

It is easy to get carried away. If a public AI can write an essay or draft a letter, why not share company knowledge with it as well? This is where Dr Richard Susskind’s warning comes in. In a short clip he reminds us to be careful what we tell public AI systems. They may be powerful, but they are not always safe places to share information.

This blog explains why that matters, the risks of public AI, and how organisations can use private AI engines as part of a secure AI for business approach to keep their knowledge safe.

Why Public AI Is So Tempting

People often forget to be careful about what they tell public AI when they upload training guides or policies. The attraction of public AI is clear. You type in a question and get an answer in seconds. Need a contract summary? It can help. Need a first draft of a presentation? It can do that too.

Because of this speed, many people think about going one step further. They wonder if it makes sense to upload training guides, policies, or even customer information to these tools so that staff can get quick answers. But uploading that data puts your knowledge base outside your control.

At first glance this seems like a clever idea. In reality it can be very risky.

5 Risks If You Are Not Careful What You Tell Public AI

Here are the main reasons we need to be careful.

- Data leakage

Once you give information to a public AI, you do not fully control where it goes. Some AI providers store inputs to improve their systems. Even if they promise privacy, you cannot be completely sure your data will stay safe.

- Loss of company secrets

Businesses often have unique ways of working, pricing methods, or trade secrets. If these are shared with a public AI, there is a risk that they will be used to train future systems or show up in other contexts.

- Breaking the law

Laws such as GDPR in the UK and Europe require organisations to keep personal data secure. Sharing customer information with a public AI could put a company in breach of these rules. That can lead to fines and loss of trust.

- Wrong answers

AI systems sometimes make things up. If you rely on them for important internal knowledge, staff could end up acting on incorrect information.

- Lack of control

With public AI you do not set the rules. The provider decides how the system is run. Your organisation has no control over how information is handled.

Why Private AI Is Safer

There is a better way. Instead of using public AI for sensitive information, companies can build or use private AI engines on their own servers or trusted cloud platforms.

The benefits are clear.

- You stay in control. You decide where data is stored and who has access to it.

- Custom fit. The AI can be trained only on your documents and processes, making it more relevant and accurate.

- Easier to follow the rules. With private AI you can show regulators that data has not left your control.

- More trust. Staff will be more confident using AI if they know it is secure and private.

Private AI gives you the benefits of automation without the risk of losing control.

How AskELIE Keeps AI Secure

At askelie, we have built our own large language model (LLM) that is hosted on private servers. This means your knowledge never leaves a controlled environment. The system can be fully ring-fenced and even cut off from the outside world when needed, giving complete assurance that sensitive data stays private. For organisations that want the benefits of AI without the risk of public exposure, ELIE provides secure AI for business, combining speed, accuracy, and compliance with full control.

Building A Safe Internal Knowledge Base

Many companies struggle with intranets and shared drives that staff do not use. AI promises to fix this problem by turning those documents into an easy-to-use knowledge base. Staff can simply ask a question and get the right answer.

To build such a system safely, there are a few important steps.

- Label documents. Decide which documents are public, internal, or confidential. Only safe categories should be included in your AI system.

- Control access. Make sure only the right people can upload or view sensitive content.

- Keep track. Record when documents are added or updated. This helps with both security and audits.

- Check the model. Document how your AI is trained and updated so you always know what data it is using.

- Protect the system. Use encryption and backups in the same way you would for any IT system.

- Train your staff. Teach employees what can and cannot be shared with AI tools.

These simple steps keep knowledge safe while still making it easy to use.
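As a rough illustration, the first three steps (label documents, control what goes in, keep track) could be sketched in Python as below. This is a minimal sketch, not a description of any particular product: the `Sensitivity` levels, the `KnowledgeBase` class, and the file names are all hypothetical, and a real system would add proper authentication, encryption, and persistent storage.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from enum import IntEnum


class Sensitivity(IntEnum):
    """Hypothetical labels, ordered from least to most sensitive."""
    PUBLIC = 0
    INTERNAL = 1
    CONFIDENTIAL = 2


@dataclass
class Document:
    name: str
    label: Sensitivity


@dataclass
class KnowledgeBase:
    # Highest label allowed into the AI system (an assumed policy choice).
    max_label: Sensitivity = Sensitivity.INTERNAL
    documents: list = field(default_factory=list)
    audit_log: list = field(default_factory=list)

    def add(self, doc: Document, uploaded_by: str) -> bool:
        """Accept the document only if its label is allowed, and log the attempt."""
        accepted = doc.label <= self.max_label
        if accepted:
            self.documents.append(doc)
        # Record every attempt, accepted or not, to support later audits.
        self.audit_log.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "user": uploaded_by,
            "document": doc.name,
            "label": doc.label.name,
            "accepted": accepted,
        })
        return accepted


kb = KnowledgeBase()
kb.add(Document("holiday-policy.pdf", Sensitivity.INTERNAL), "alice")
kb.add(Document("pricing-model.xlsx", Sensitivity.CONFIDENTIAL), "bob")
print([d.name for d in kb.documents])  # only the internal document is kept
```

Logging rejected uploads as well as accepted ones is a deliberate choice here: it shows auditors not only what the system contains, but also what was kept out.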

How To Get Started

If your organisation is considering using AI to manage knowledge, start small.

- Begin with an audit of your data. Work out which information would be useful for staff but harmful if it leaked.

- Decide on sensitivity levels. Some data is fine for AI use, some is not.

- Pick your infrastructure. Choose a private cloud or on-premise system that matches your security needs.

- Write a clear policy for staff. Set out when to use public AI tools and when to stick to the private system.

- Run a small pilot project in one team, learn from it, and then expand.

When setting policy, remind staff to be careful what they tell public AI so they do not share sensitive knowledge by mistake. Treat AI as both a technology project and a governance project. Doing so reduces risks and builds confidence.

Using Public And Private AI Together

Public AI still has its place. It is very useful for low-risk tasks such as drafting general text, creating ideas, or explaining common topics. The danger is when sensitive or unique knowledge is shared without thinking.

The best approach is to use public AI for general work and private AI for company knowledge. Think of public AI as a casual adviser for simple questions, and private AI as the trusted expert who knows your business inside out but never shares secrets.

This balance allows you to get the benefits of both while avoiding the dangers.
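One way to picture this split is a small routing rule that decides which engine a request should go to. The sketch below is only an illustration under stated assumptions: the `Sensitivity` labels and the `choose_engine` function are hypothetical, and the email pattern is a deliberately crude stand-in for real personal-data detection.

```python
import re
from enum import Enum


class Sensitivity(Enum):
    """Hypothetical labels a policy might assign to a request."""
    PUBLIC = "public"
    INTERNAL = "internal"
    CONFIDENTIAL = "confidential"


# Very rough check for email addresses; real personal-data detection needs more.
EMAIL_PATTERN = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")


def choose_engine(prompt: str, label: Sensitivity) -> str:
    """Return "public" only for public-labelled prompts with no obvious personal data."""
    if label is not Sensitivity.PUBLIC:
        return "private"
    if EMAIL_PATTERN.search(prompt):
        return "private"
    return "public"


print(choose_engine("Explain what GDPR is", Sensitivity.PUBLIC))
print(choose_engine("Reply to jane.doe@example.com about her refund", Sensitivity.PUBLIC))
print(choose_engine("Summarise our pricing method", Sensitivity.CONFIDENTIAL))
```

The rule defaults to the private engine whenever there is doubt, which mirrors the advice above: general questions can go to public AI, while anything labelled sensitive or containing personal details stays inside.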

Conclusion: Why You Must Be Careful What You Tell Public AI

Dr Richard Susskind’s warning is clear. Be careful what you tell public AI. Knowledge is the most valuable asset an organisation has. Once it is shared outside your control, it can be very hard to get it back.

Public AI is excellent for general queries and everyday help. Private AI is essential for internal knowledge and sensitive data. The future is likely to be a mix of both.

Before you upload a document or process into a public system, stop and ask yourself a simple question. Would I be happy if this information appeared outside my organisation? If the answer is no, keep it private.

AI is a powerful tool. Use it wisely, protect your knowledge, and it will help your organisation thrive without putting what matters most at risk.
